Immersive audio is poised to take the industry by storm. Since so much music is consumed over headphones, it’s no wonder we’d see a shift towards making music that embraces the unique characteristics of the listening device and delivers an experience beyond the stereo one we’ve known for the past, wait, how long has stereo been around? The 1930s??? Sure, we’ve had surround for a while too, but outside of niche classical recordings and film scores, how often do you hear music that uses a proper surround mix? Most people want their music to go with them, and you can’t lug a receiver and a 5.1 speaker setup to a coffee shop just so you can type. Streaming has given us the ultimate portability, and our playback devices need to follow suit, so headphones, and thus stereo, it is!

Stereo audio gives us a very expansive soundstage, despite technically being only one-dimensional. You can cram a lot of information into the various pan positions, crafting complex, nuanced, and lively recordings. You can add depth by playing with delay and EQ (the farther away something is, the less high-frequency content it’ll have). But for all that, it’s still sound coming out of two sources at varying volume levels, and that’s not how the human brain hears. Our brains can pinpoint the location of a sound in three dimensions. We can hear whether it’s on the left or the right, but we can also hear how far away it is, whether it’s in front of or behind us, even whether it’s above or below us. We do this in a variety of nifty ways. There’s the Haas effect (more precisely, the interaural time difference), which is the small delay between when a sound hits one ear vs. the other. If a sound is directly in front of us, it’ll hit both ears at once. If it’s to the left, it’ll hit the left ear before the right. This is one of the ways our brain determines relative position. There’s also your unique head-related transfer function (HRTF). This describes how your head, ears, and body shape the sound as it bounces around and enters your ears. If a sound is on your left, it’s going to reach your right ear with different frequency content than your left, because your head is in the way. Your brain interprets these timing and frequency differences to build a detailed picture of not only the position of objects, but the actual size and shape of the space you’re in! With that understanding, it’s only natural that we in the audio biz want to play around with it.
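
To put rough numbers on the timing cue, here’s a small sketch using Woodworth’s spherical-head approximation, a textbook formula that treats the head as a rigid sphere and estimates the interaural time difference for a distant source. The 8.75 cm head radius and 343 m/s speed of sound are assumptions standing in for an “average” listener; real heads vary, which is exactly why these cues are so personal.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 °C
HEAD_RADIUS = 0.0875     # m, an assumed "average" head radius

def woodworth_itd(azimuth_deg: float, head_radius: float = HEAD_RADIUS,
                  c: float = SPEED_OF_SOUND) -> float:
    """Approximate interaural time difference, in seconds, for a distant source.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side.
    Woodworth's formula: ITD = (r / c) * (theta + sin(theta)),
    intended for azimuths between 0 and 90 degrees.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be in [0, 90]")
    theta = math.radians(azimuth_deg)
    return (head_radius / c) * (theta + math.sin(theta))

# Print the estimated delay between the ears at a few source angles.
for az in (0, 30, 60, 90):
    print(f"{az:>2} deg -> {woodworth_itd(az) * 1000:.3f} ms")
```

Under these assumptions, a source directly to one side reaches the near ear only about 0.66 ms before the far ear, which lines up with keeping Haas-style delays under 1 ms.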

You may already have a Haas plugin on your computer. If you signed up for the Slate bundle, you have one. You can also recreate the effect with any delay that offers fine enough time resolution by delaying one side by less than 1 ms. This will introduce phasing, so bear that in mind, but you can absolutely use how the brain works to play with the sense of space. Combine Haas and HRTF, which growing processing power now lets us do, and you get a new toy that may end up being the next great shift in music: binaural audio!
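
As a rough illustration of the delay trick, here’s a minimal NumPy sketch that turns a mono signal into stereo by delaying one channel by a fraction of a millisecond. It’s not how the Slate plugin or any other product works internally; the function name `haas_widen` is hypothetical, and the delay is rounded to whole samples for simplicity, where a more careful version would use fractional delays and smoothing for automation.

```python
import numpy as np

def haas_widen(mono: np.ndarray, sample_rate: int, delay_ms: float,
               delayed_side: str = "right") -> np.ndarray:
    """Turn a mono signal into a (num_samples, 2) stereo array.

    One channel is an exact copy; the other is the same signal delayed by
    delay_ms, rounded to the nearest whole sample (no fractional delay).
    """
    delay_samples = int(round(delay_ms * 1e-3 * sample_rate))
    # Pad the front with silence, then trim back to the original length.
    delayed = np.concatenate([np.zeros(delay_samples), mono])[: len(mono)]
    if delayed_side == "right":
        left, right = mono, delayed   # left ear hears it first, image pulls left
    elif delayed_side == "left":
        left, right = delayed, mono   # right ear hears it first, image pulls right
    else:
        raise ValueError("delayed_side must be 'left' or 'right'")
    return np.stack([left, right], axis=1)

# Example: a 0.5 ms offset at 48 kHz works out to 24 samples.
rng = np.random.default_rng(0)
noise = rng.standard_normal(48000)
stereo = haas_widen(noise, 48000, delay_ms=0.5)
```

Over headphones, the delayed copy should nudge the image toward the undelayed side. Summing the two columns back to mono is where the phasing shows up.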

I was first exposed to binaural audio through ASMR, that delightful, tingly, whispery trend that either makes you feel amazing or doesn’t do squat. A popular mic choice for those videos was the 3Dio, which is two omni lav mics stuck in silicone ears spaced roughly as far apart as an average person’s ears. This helps recreate the Haas effect, as well as part of the HRTF, giving you a good enough recreation of what it actually sounds like to have someone whisper in your ear while cutting your hair (higher-end options, like the Neumann KU100, use a full dummy head for more accurate capture).

Done right, the effect can transcend the uncanny valley and actually give you the impression that you’re in the room with the sound happening all around you. Done wrong, though, and things just start to sound weird. Both the Haas effect and HRTF are very specific to each person’s physiology. Your brain has had a lifetime to learn the intricacies of your ears and head and hone its ability to pinpoint spatial sound, but it does so based on your own unique set of physical properties. If you try to recreate this effect, whether through physical means, like a binaural microphone, or through software, you’re going to get an approximation, not an exact match, and your brain is going to pick up on it. This makes any binaural sound a crapshoot: if the effect happens to match an individual, it’ll sound amazing. If it doesn’t, it’ll sound weird.

I’ve been playing with binaural effects in my mixes for years now. Ever since stumbling on the aforementioned ASMR videos, I’ve wondered how I could translate something that sounds so unique and cool into the music realm. After doing some research, I landed on Sennheiser’s AMBEO plugin (now rebranded as dearVR). First, it was free. Always love free stuff. It gave me rudimentary control over the position, width, and even height of the source (plus some reverb, but I tend to turn that off). No distance control, but I know how to get that effect with a fader and a low-pass filter. Automating those isn’t the worst, and it’s certainly easier than trying to automate Haas. Plus, having a visual cue for where something sat in the sound field was useful too. I began experimenting, starting with the little bits of ear candy I was going to automate the panning on anyway, then scaling up to positioning static elements and eventually the whole mix! Did it sound good?
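
The fader-plus-filter approach to distance can be sketched like this. The level drop follows the inverse-distance law for a point source (about 6 dB quieter per doubling of distance). The distance-to-cutoff curve is a hypothetical, hand-tuned mapping for illustration only, not a physical model, and the filter is a basic one-pole low-pass rather than anything a particular plugin uses. All function names here are made up for the example.

```python
import math
import numpy as np

def distance_gain_db(distance_m: float, reference_m: float = 1.0) -> float:
    """Inverse-distance level change for a point source: about -6 dB per doubling."""
    return -20.0 * math.log10(distance_m / reference_m)

def cutoff_for_distance(distance_m: float) -> float:
    """Hypothetical distance-to-cutoff mapping in Hz (not a physical model).

    Darkens the sound as it moves away: 20 kHz at 1 m or closer,
    falling as 1/distance, with a 2 kHz floor.
    """
    return max(2000.0, 20000.0 / max(distance_m, 1.0))

def one_pole_lowpass(x: np.ndarray, cutoff_hz: float, sample_rate: int) -> np.ndarray:
    """Simple 6 dB/octave low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = np.empty_like(x, dtype=float)
    state = 0.0
    for n, sample in enumerate(x):
        state += a * (sample - state)
        y[n] = state
    return y

def place_at_distance(x: np.ndarray, distance_m: float, sample_rate: int) -> np.ndarray:
    """Apply the 'fader' (gain) and the 'filter' (low-pass) for a given distance."""
    gain = 10.0 ** (distance_gain_db(distance_m) / 20.0)
    return gain * one_pole_lowpass(x, cutoff_for_distance(distance_m), sample_rate)
```

Automating distance then boils down to moving two parameters together, which is the same thing the fader-and-filter automation does by hand.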

NO! Of course not! The more I used the plugin, the weirder everything got. No algorithm or dummy head, no matter how average, is going to guarantee a uniformly pleasant listening experience. The more you rely on binaural sounds, the more likely you are to alienate listeners who simply can’t get past sounds that don’t sound the way they’re supposed to. With the entire mix binauraled, it doesn’t sound like I’m in the room, which is what I wanted. It doesn’t even sound like stuff is around me! It just sounds odd, and worse, phasey. Because that’s the other big drawback. While Haas may sound cool over headphones, it can be absolutely atrocious over speakers. Tiny delays, like the sub-millisecond ones we use for Haas, introduce phase issues. These cause all sorts of nasty comb filtering when the channels are summed, and even weird sensations in the air, depending on where you’re sitting in the room. As such, this kind of effect really, really shouldn’t be used on everything. You still need to worry about how it’ll translate to other listening environments. People love headphones, but they also love Bluetooth soundbars (mono), phone speakers (mono), laptop speakers (stereo), car speakers (stereo), and yes, even high-end studio monitors in well-treated acoustic environments (stereo). If your mix doesn’t work on all of these, no amount of cool effects is going to save you.
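
To see why those tiny delays cause trouble on mono playback, consider summing a signal with a copy of itself delayed by τ seconds. The combined magnitude is |1 + e^(−j2πfτ)| = 2|cos(πfτ)|, so low frequencies get up to +6 dB while every odd multiple of 1/(2τ) cancels completely. This small sketch assumes both channels are at equal level (the worst case); the function names are just for illustration.

```python
import numpy as np

def summed_response_db(freqs_hz: np.ndarray, delay_s: float) -> np.ndarray:
    """Magnitude in dB of x(t) + x(t - delay), relative to a single copy.

    |1 + e^{-j 2 pi f tau}| = 2 |cos(pi f tau)|: +6 dB at 0 Hz,
    complete cancellation at odd multiples of 1 / (2 tau).
    """
    mag = 2.0 * np.abs(np.cos(np.pi * freqs_hz * delay_s))
    return 20.0 * np.log10(np.maximum(mag, 1e-12))  # floor avoids log10(0)

def notch_frequencies(delay_s: float, max_hz: float = 20000.0) -> list[float]:
    """Frequencies that cancel when the two copies are summed: (2k + 1) / (2 tau)."""
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2.0 * delay_s)
        if f > max_hz:
            break
        notches.append(f)
        k += 1
    return notches

# Notches (in Hz) for a 0.5 ms Haas-style offset summed to mono.
print([round(f) for f in notch_frequencies(0.0005)])
```

With τ = 0.5 ms, the first notch lands at 1 kHz and the notches repeat every 2 kHz after that, carving holes straight through the midrange.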

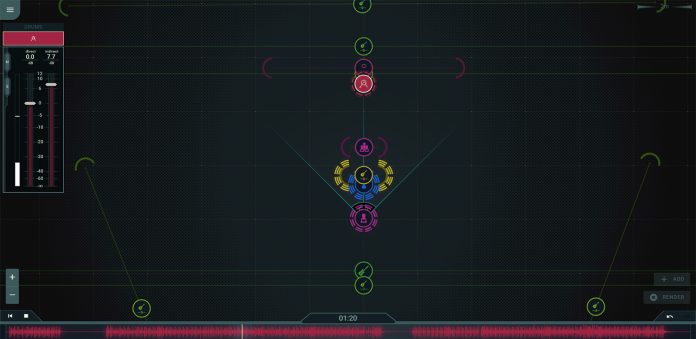

And with that, let’s talk about Mix Cubed. Mix Cubed is a new, cloud-based mixing platform that aims to bring binaural and spatial mixing to the masses. Instead of requiring (tens of) thousands of dollars for a multi-speaker Atmos setup (or, you know, a free plugin), it gives you a mixing platform for placing your audio in an actual 3D space (for now 2D, but they’re planning to add the third dimension in the future). The initial, basic layout is actually really good. Similar to Neutron’s visual mixer, it gives you a grid with a little bubble for each of your tracks. You drag the bubbles around, and that controls where each track is perceived relative to the listener, both direction and distance. With a manageable number of tracks, this is pretty easy. Making additional level adjustments is a snap too. You just open the mixer, which is like any other DAW mixer, or select an individual track bubble to control not only its overall level but also the relative level of its indirect reflections. Extra points there. The basic operation of the platform is intuitive and easy, so really anyone can jump in, start playing around, and make something in no time. I can get a good balance done before the song has even finished playing, which is huge. That means there’s more time for creativity!

There are drawbacks, like with everything. I really want it in plugin form, like Neutron, with an instance on every track and an overall mixer where I adjust things. I, however, can’t have it in plugin form. Because it’s cloud-based, they’re able to run far more complex algorithms and signal processing than even the beefiest computer could handle. Good for quality, good for the democratization of audio, but not ideal for workflow. As it stands, you’re stuck using two DAWs, which is exactly why I don’t use my Antelope plugins. It’s a big hassle, and it limits Mix Cubed’s usefulness for free-flowing, creative signal processing. Currently there’s no way to actually affect the sound. No EQ, no compression, no saturation or delay or modulation or reverb. Thankfully, both automation and control over your acoustic space are forthcoming, which matters because the 3D effect is most apparent when things move. The team seems dedicated to adding as many features as they can, which is very much appreciated.

When I first played around with it, I wasn’t impressed with the quality of the mixes, though I discovered this was because I was using raw tracks. When I printed the signal processing from a finished mix, that’s when things started sounding really good. I kept the processing that made everything sound good in the first place, and got extra separation and nicer interplay from tracks I’d already balanced. Without signal processing in Mix Cubed, I have to mix in my normal DAW in stereo, print my stems with my signal processing, import them into Mix Cubed, and then try to recreate the balance and spatial placement of the original mix, which is a hassle. It’s also likely how the service is going to be used. I want my plugins, and until we can load our own VSTs and build our own cloud-based plugin library (which would be so, so, so cool), we’re not really going to be able to move over completely. Maybe we shouldn’t? Remember how I said there are still lots of reasons to keep listening in stereo? While Mix Cubed translates surprisingly well to non-headphone listening devices, it’s not perfect. And if Apple’s new spatial audio addition is any indication of where streaming is headed, we’ll end up being able to choose between stereo and spatial instead of being forced to use one or the other. That means we’re going to need two versions of our mixes, which I don’t mind doing. I’ve wanted this for years, and for something that’s easy(ish), affordable (they say their pricing is similar to a streaming service), and sounds good (and will likely keep being updated to sound even better), I’ll gladly make two mixes. And also charge extra for them.

I’ve started doing this on a record I’m working on, and I’m blown away. For a smaller, more intimate song, I got the mix about 90% done before I’d even finished uploading the tracks, just by placing things in space where I thought I wanted them. A few tweaks later, it’s not finished, but it’s definitely releasable, all in less than five minutes. The bigger guitar rock track is proving more difficult, but it’s getting there. Lots of tracks, lots of things going on. I’ll need automation, since printing my panning automation doesn’t give the effect I’m after, and I’ll need to decide whether to recreate the stereo mix or see where spatial audio takes me creatively. Either way it’s going to sound great, and the intuitive interface is going to make whichever direction I go easy and quick. By being cloud-based, affordable, and easy to use, Mix Cubed is truly bringing what is (or could be) the future of audio to the masses. And it’s fun. So, so much fun.

Check out an example video (use headphones) of what the software can do, and maybe I’ll do my own tutorial on how I see this fitting into my mixing workflow!

Mix Cubed is currently in beta. You can sign up for an invitation here. And you should.

Mix Cubed

Lilian Blair is a producer, engineer, and audio educator in the Seattle area. She specializes in studio recording, mixing, and helping artists achieve their musical dreams.

www.lilianblair.com